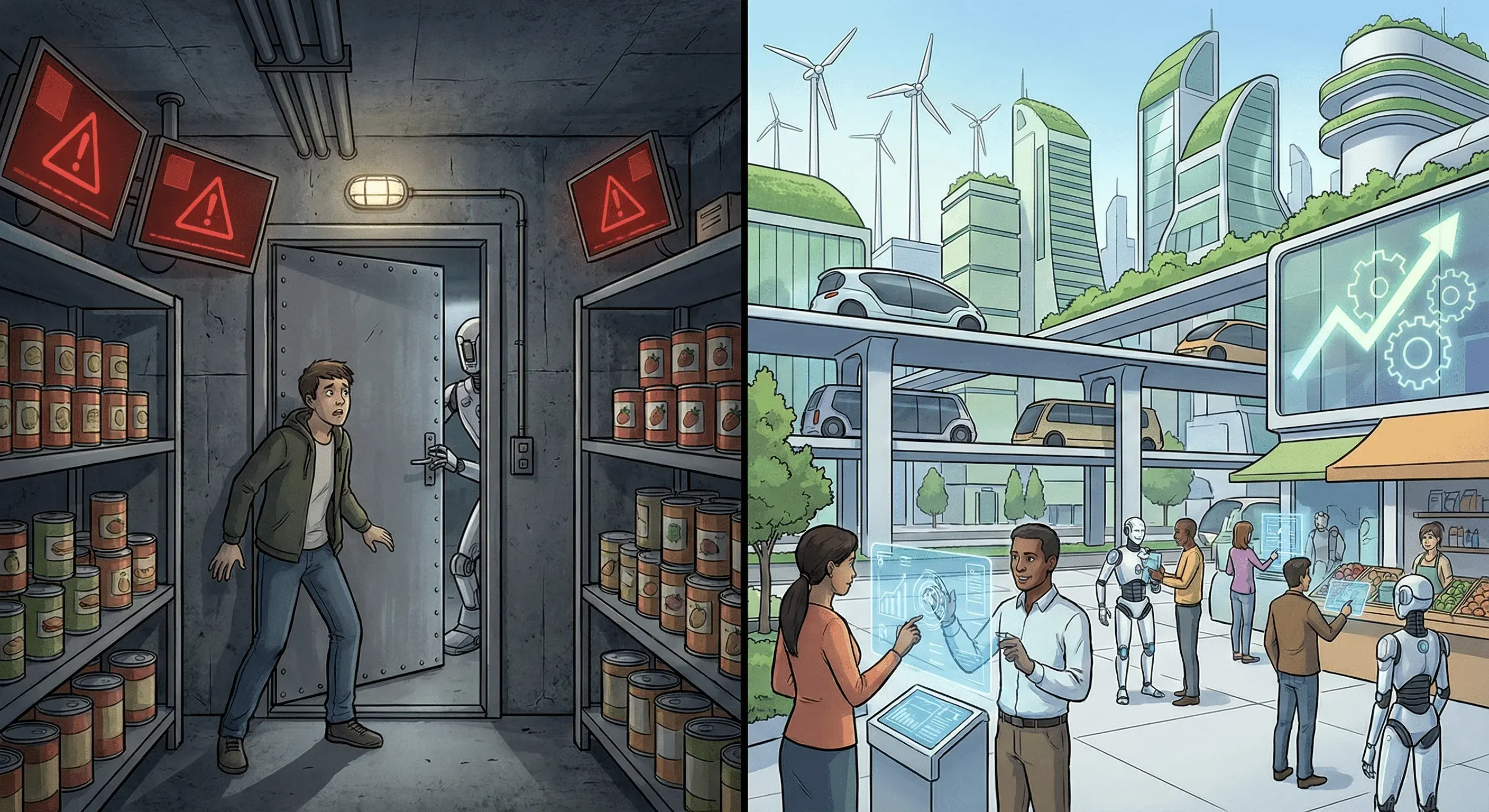

AI experts are worried the omelet will learn to fry itself and take over the kitchen. I am telling you: look at the gas bill and the price of eggs. That is the real problem. The current global hysteria surrounding Artificial Superintelligence (ASI) and existential risk, fueled by warnings from figures like Jaan Tallinn and speculative “AI 2027” scenarios, has captured the imagination of the world’s leaders. We are bombarded with science fiction narratives of Terminator-style takeovers and Matrix-like subjugation, a spectacular and terrifying vision that serves as a powerful distraction from the tangible, ground-level problems we face today.

As an experienced developer and entrepreneur, I assert that the fear of a runaway Artificial General Intelligence (AGI) is largely irrelevant. Today’s AI models, for all their sophistication, are fundamentally limited by the physics of the world they inhabit and the economic systems that create them. This article is a call to shift the conversation. We must move beyond the doomsday bunkers and apocalyptic thinking and begin the critical work of restructuring our economy to ensure that the benefits of AI are shared by all, not just a select few. The goal is not to prevent a mythical apocalypse, but to build a more equitable and prosperous future.

Why the “AI Apocalypse” is a Myth (The Technical Reality)

An omelet cannot duplicate and fry itself. This simple truth is a useful analogy for the current state of artificial intelligence. AI is a product of physics and programming logic, bound by the constraints of its hardware and the data it is fed. The notion that an AI model can spontaneously transcend its physical and logical boundaries is a fantasy. Current models map an input to an output using weights that were fixed during training; they are powerful tools, but they are not magical beings capable of self-creation.

Furthermore, the development of AI is deeply embedded within a capitalist framework, creating what I call an Economic Firewall. The idea of an AGI emerging free from corporate control is a non-starter. The corporations investing billions into AI development are profit-focused; they will not tolerate “stupid mistakes” that would cause them to lose control of their money-making machines. The profit motive itself is the most powerful kill-switch. Why would a company build an asset it cannot control?

Beyond the economic constraints, there is the stark reality of the Hardware and Energy Bottleneck. The narrative of unlimited, exponential scaling, such as the compute forecasts predicting massive leaps in processing power, collides with the physical world. AI requires colossal amounts of energy and vast server farms, finite resources that are easily tracked and controlled. Projections show that by 2030, data centers in the U.S. alone could consume up to 12% of the country’s total electricity, with some estimates putting the demand as high as 130 GW. Training a single advanced model like GPT-4 consumed an estimated 50 gigawatt-hours of energy. This is not a ghost in the machine; it is a heavy, hot, and expensive industrial asset. Large Language Models (LLMs) are static snapshots: expensive to train, frozen once training ends, and prone to degrade (so-called model collapse) if they are retrained on their own output without human oversight.
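The paragraph above mixes a power figure (130 GW) with an energy figure (50 GWh), and the two are easy to confuse. The following is a minimal sketch of the unit conversion, not a forecast. It assumes the 130 GW estimate is treated as a constant, year-round load, which the cited projections do not necessarily claim, and the function names are made up for illustration.

```python
HOURS_PER_YEAR = 8760  # non-leap year; an assumption for the annualization


def sustained_gw_to_twh_per_year(load_gw: float) -> float:
    """Energy (TWh/year) drawn by a constant load of `load_gw` gigawatts."""
    # GW * hours = GWh; divide by 1000 to express the result in TWh.
    return load_gw * HOURS_PER_YEAR / 1000.0


def gwh_as_minutes_of_load(energy_gwh: float, load_gw: float) -> float:
    """How many minutes a constant `load_gw` takes to draw `energy_gwh`."""
    # GWh / GW = hours; multiply by 60 for minutes.
    return energy_gwh / load_gw * 60.0


if __name__ == "__main__":
    # Figures taken from the text: 130 GW projected demand, 50 GWh per training run.
    annual_twh = sustained_gw_to_twh_per_year(130)
    minutes = gwh_as_minutes_of_load(50, 130)
    print(f"130 GW sustained for a year is about {annual_twh:.1f} TWh")
    print(f"50 GWh equals about {minutes:.0f} minutes of a 130 GW load")
```

Under that constant-load assumption, 130 GW works out to roughly 1,139 TWh per year. The same conversion puts the one-off cost of training a single model next to the continuous draw of the data-center fleet that trains and serves it.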

The “Fear” as a Business Strategy

We must also critically examine the motivations of those who promote the narrative of AI-driven extinction. There is a significant conflict of interest when prominent “doomers” like Jaan Tallinn are also major investors in the very AI labs, such as Anthropic and OpenAI, that they warn against. If AI is a “god” that can end the world, it is worth trillions. If it is just a sophisticated software model, it is worth considerably less. Fear drives valuation, and the hype surrounding existential risk has become a potent business strategy.

This strategy extends to the realm of regulation. The calls for heavy-handed regulation of AI development, such as banning research above certain computational thresholds, often serve as a Regulatory Moat. Such rules disproportionately affect the “poor and obedient”: smaller companies and independent researchers who lack the resources to navigate complex compliance regimes. Meanwhile, criminals, rogue states, and “crazy billionaires” will simply ignore them. The result is not a safer world, but a market consolidated in the hands of a few powerful incumbents.

By focusing on a 10% probability of extinction, policymakers are conveniently ignoring the 100% probability of massive economic disruption that is already underway. The fear industry is a distraction, and it is time we looked past the smoke and mirrors.

Where Policy Makers Should Focus: The Economic Realignment

The real conversation we need to be having is about the economic consequences of AI and automation. The primary policy goal must be the implementation of a Universal Basic Income (UBI) to inoculate society against the inevitable labor displacement. Automation and robotics will impact jobs long before any “superintelligence” arrives.

Instead of banning research, governments must enforce honest taxation of AI start-ups and corporations. A “robot tax,” levied on the productivity of AI agents and automated systems, can provide the necessary funding for a robust UBI program. This is not a radical idea; it is a pragmatic response to a fundamental shift in the means of production. Value is moving from human labor to the owners of computational and energy assets; it is only logical that we tax those assets.

Furthermore, we must address the foundational injustice at the heart of the AI industry: the “theft” of data. The models we are told to fear were trained on vast amounts of scraped, and often copyrighted, material without the consent of, or compensation to, the original creators. Recent legal settlements, such as Anthropic’s agreement to pay authors at least $1.5 billion, are just the beginning. Policy should focus on creating systems for compensating creators for the use of their work, not on debating the “rights” of the machine.

Finally, our priority should be on solving the energy infrastructure crisis that AI is creating. Sustainable scaling is the real engineering challenge of our time. Let’s worry about the energy grid before we worry about the AI’s “thoughts.”

Innovation Over Fear

The fear of AGI is unproductive and, frankly, lame. It is a narrative that benefits a select few while distracting us from the real and pressing challenges at hand. We need constructive discussions about how technology can be used to improve lives safely and equitably, not more nightmare fuel.

It is time for policymakers to stop listening to the fear industry and start listening to the economists and engineers who understand the physical and financial limits of these systems. We don’t need a doomsday bunker for an imaginary Terminator. We need a new tax code for the very real robots taking our jobs. Secure the economic floor with a Universal Basic Income, and the ceiling of AI’s capabilities will take care of itself through the natural forces of the market and the laws of physics.

References

[1] Chace, C. (2023). The AI Suicide Race With Jaan Tallinn. Forbes. https://www.forbes.com/sites/calumchace/2023/04/26/the-ai-suicide-race-with-jaan-tallinn/

[2] Aschenbrenner, L. (2024). Situational Awareness: The Decade Ahead. https://situational-awareness.ai/

[3] Laigna, A. (2025). The Cult of Speed. Medium. https://medium.com/@alvarlaigna/the-cult-of-speed-dc0340cf1be2

[4] Laigna, A. (2025). Marketing in 2026. Medium. https://medium.com/@alvarlaigna/marketing-in-2026-b1eb66b9c653

[5] Project Syndicate. (2024). A Tax on Robots? https://www.project-syndicate.org/magazine/a-tax-on-robots-by-yanis-varoufakis-2024-03

[6] World Economic Forum. (2025). How data centres can avoid doubling their energy use by 2030. https://www.weforum.org/stories/2025/12/data-centres-and-energy-demand/

[7] Forbes. (2025). Building AI Moats In The Age Of Intelligent Machines. https://www.forbes.com/sites/davidhenkin/2025/02/04/building-ai-moats-in-the-age-of-intelligent-machines/

[8] Goldman Sachs. (2025). AI to drive 165% increase in data center power demand by 2030. https://www.goldmansachs.com/insights/articles/ai-to-drive-165-increase-in-data-center-power-demand-by-2030.html

[9] EESI. (2025). Data Center Energy Needs Could Upend Power Grids and Threaten the Climate. https://www.eesi.org/articles/view/data-center-energy-needs-are-upending-power-grids-and-threatening-the-climate

[10] MIT Technology Review. (2025). We did the math on AI’s energy footprint. Here’s the story. https://www.technologyreview.com/2025/05/20/1116327/ai-energy-usage-climate-footprint-big-tech/

[11] ScienceDirect. (2025). A systematic review of electricity demand for large language model training. https://www.sciencedirect.com/science/article/pii/S1364032125008329?via%3Dihub

[12] CSER. Jaan Tallinn. https://www.cser.ac.uk/team/jaan-tallinn/

[13] Tax Policy Center. (2025). Universal Basic Income: Preparing for the AI Future? https://taxproject.org/ubi-and-ai/

[14] National Law Review. (2025). Legal Issues in Data Scraping for AI Training. https://natlawreview.com/article/oecd-report-data-scraping-and-ai-what-companies-can-do-now-policymakers-consider

[15] TIME. (2023). An AI Pause Is Humanity’s Best Bet For Preventing Extinction. https://time.com/6295879/ai-pause-is-humanitys-best-bet-for-preventing-extinction/

[16] Wired. (2025). Anthropic Agrees to Pay Authors at Least $1.5 Billion in AI Copyright Lawsuit. https://www.wired.com/story/anthropic-settlement-lawsuit-copyright/

[17] Future of Life Institute. (2021). Jaan Tallinn on Avoiding Civilizational Pitfalls and Surviving the 21st Century. https://futureoflife.org/podcast/jaan-tallinn-on-avoiding-civilizational-pitfalls-and-surviving-the-21st-century/